“Advanced Engineering Research (Rostov-on-Don)” is a peer-reviewed scientific and practical journal. It aims to inform readers about the latest achievements and prospects in the fields of Mechanics, Mechanical Engineering, Computer Science and Computer Technology. The journal is a forum for cooperation between Russian and foreign scientists and contributes to the convergence of the Russian and world scientific and information space.

Priority is given to publications in the field of theoretical and applied mechanics, mechanical engineering and machine science, friction and wear, as well as methods of control and diagnostics in mechanical engineering and welding production issues. Along with the discussion of global trends in these areas, attention is paid to regional research, including issues of mathematical modeling, numerical methods and software packages, software and mathematical support of computer systems, and information technology challenges.

All articles are published in Russian and English and undergo a peer-review procedure.

The journal is included in the List of peer-reviewed scientific editions, in which the main scientific results of dissertations for the degrees of Candidate and Doctor of Science are published (List of the Higher Attestation Commission under the Ministry of Science and Higher Education of the Russian Federation).

The journal covers the following fields of science:

- Theoretical Mechanics, Dynamics of Machines (Engineering Sciences)

- Deformable Solid Mechanics (Engineering Sciences, Physical and Mathematical Sciences)

- Mechanics of Liquid, Gas and Plasma (Engineering Sciences)

- Mathematical Simulation, Numerical Methods and Program Systems (Engineering Sciences)

- System Analysis, Information Management and Processing, Statistics (Engineering Sciences)

- Automation and Control of Technological Processes and Productions (Engineering Sciences)

- Software and Mathematical Support of Machines, Complexes and Computer Networks (Engineering Sciences)

- Computer Modeling and Design Automation (Engineering Sciences, Physical and Mathematical Sciences)

- Computer Science and Information Processes (Engineering Sciences)

- Machine Science (Engineering Sciences)

- Machine Friction and Wear (Engineering Sciences)

- Technology and Equipment of Mechanical and Physicotechnical Processing (Engineering Sciences)

- Engineering Technology (Engineering Sciences)

- Welding, Allied Processes and Technologies (Engineering Sciences)

- Methods and Devices for Monitoring and Diagnostics of Materials, Products, Substances and the Natural Environment (Engineering Sciences)

- Hydraulic Machines, Vacuum, Compressor Equipment, Hydraulic and Pneumatic Systems (Engineering Sciences)

The editorial policy of the journal is based on the traditional ethical principles of Russian scientific periodicals. It supports the Code of Ethics of Scientific Publications formulated by the Committee on Publication Ethics (Russia, Moscow) and adheres to the ethical standards for editors and publishers enshrined in the Code of Conduct and Best Practice Guidelines for Journal Editors and the Code of Conduct for Journal Publishers, developed by the Committee on Publication Ethics (COPE).

The journal is addressed to those who develop strategic directions for the development of modern science — scientists, graduate students, engineering and technical workers, research staff of institutes, practical teachers.

About the journal

In September 2020, the scientific journal “Vestnik of Don State Technical University” (ISSN 1992-5980) changed its title.

The new title of the journal is “Advanced Engineering Research (Rostov-on-Don)” (eISSN 2687-1653).

The journal “Advanced Engineering Research (Rostov-on-Don)” was registered with the Federal Service for Supervision of Communications, Information Technology and Mass Media on August 7, 2020 (extract from the register of registered mass media ЭЛ №ФС 77-78854, electronic edition).

All articles of the journal have a DOI registered in the CrossRef system.

Founder and publisher: Federal State Budgetary Educational Institution of Higher Education "Don State Technical University", Rostov-on-Don, Russian Federation, https://donstu.ru/

ISSN (online) 2687-1653

Year of foundation: 1999.

Frequency: 4 issues per year (March 30, June 30, September 30, December 30).

Distribution: Russian Federation.

The journal "Advanced Engineering Research (Rostov-on-Don)" accepts for publication original articles, studies, and review papers that have not been previously published.

Website: https://www.vestnik-donstu.ru/

Editor-in-Chief: Alexey N. Beskopylny, Dr. Sci. (Engineering), Professor (Rostov-on-Don, Russia).

Languages: Russian, English

Key characteristics: indexing, peer-reviewing.

Licensing history:

The journal uses the Creative Commons Attribution 4.0 International (CC BY) license.

Current issue

MECHANICS

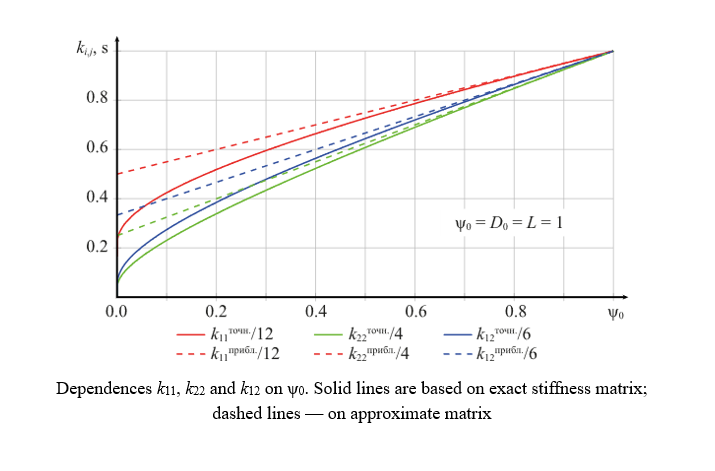

A new approach for calculating beams with variable stiffness along their length is developed. Exact and approximate stiffness matrices for beam finite elements are obtained. Improved calculation accuracy and reduced required model discretization are demonstrated. Criteria for choosing between exact and approximate element stiffness matrices are presented. The results are suitable for integration into structural analysis software packages. The method can be applied to the analysis of reinforced concrete beams, as well as beam stability and dynamics.

Introduction. Modern trends in construction, related to the optimization of weight and materials, require accurate methods for calculating the stress-strain state, particularly of beams with variable stiffness. Analytical calculation of the stress-strain state for such beams is fraught with considerable difficulties, limiting its practical application. Numerical methods, specifically the Finite Element Method (FEM), are widely used to solve these problems, where the law of stiffness change is typically approximated by a piecewise-constant (discrete) function. This study is aimed at the development of an approach based on piecewise-linear approximation of stiffness. Linear stiffness approximation offers a good balance of accuracy and computational resources. This approach provides significantly higher accuracy compared to the traditional discrete approximation with similar computational complexity, allowing for adequate modeling of both smooth stiffness gradients and abrupt stiffness changes.

Materials and Methods. A first-approximation stiffness matrix for a one-dimensional beam finite element with linearly varying flexural stiffness was derived on the basis of a variational formulation of the problem. An exact stiffness matrix was obtained by direct integration of the differential equation for beam bending. In the calculation examples, an exact solution was obtained using the Maple software package. The numerical solution using FEM was implemented in the author's program written in Python.
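As an illustration of the variational route mentioned above, the following is a minimal sketch of an approximate stiffness matrix for an Euler-Bernoulli beam element whose flexural stiffness varies linearly between its end values. It is not the authors' program: the function name, the argument names `EI1`, `EI2`, `L`, and the choice of cubic Hermite shape functions with two-point Gauss quadrature are assumptions made for the example.

```python
import numpy as np

def beam_stiffness_linear_EI(EI1, EI2, L):
    """Approximate 4x4 stiffness matrix of a beam element with flexural
    stiffness varying linearly from EI1 (node 1) to EI2 (node 2).

    Hypothetical helper for illustration. DOF order: [w1, theta1, w2, theta2].
    K = integral over the element of EI(x) * B^T B dx, where B holds the
    second derivatives of the cubic Hermite shape functions.
    """
    # Two-point Gauss-Legendre rule on [-1, 1]; exact for cubic integrands,
    # which is the case here (quadratic B^T B times linear EI).
    gauss_pts = np.array([-1.0, 1.0]) / np.sqrt(3.0)
    gauss_wts = np.array([1.0, 1.0])
    K = np.zeros((4, 4))
    for xi, wt in zip(gauss_pts, gauss_wts):
        # Curvature-displacement vector d2N/dx2 at natural coordinate xi.
        B = np.array([
            6.0 * xi / L**2,
            (3.0 * xi - 1.0) / L,
            -6.0 * xi / L**2,
            (3.0 * xi + 1.0) / L,
        ])
        # Linear interpolation of the flexural stiffness along the element.
        EI = 0.5 * (1.0 - xi) * EI1 + 0.5 * (1.0 + xi) * EI2
        K += wt * EI * np.outer(B, B) * (L / 2.0)  # Jacobian dx = (L/2) dxi
    return K

# Example call with arbitrary illustrative values.
K_example = beam_stiffness_linear_EI(EI1=2.0e6, EI2=1.0e6, L=3.0)
```

For `EI1 == EI2` this reduces to the classical uniform-beam element matrix, which gives a quick sanity check. The exact matrix obtained by direct integration of the bending equation is a different object and is not reproduced here.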

Results. During the study, approximate and exact stiffness matrices of the beam finite element were obtained, as well as the vector of nodal reactions (loads) from distributed loads. The efficiency of the proposed approach was demonstrated by numerical examples. The results obtained by the FEM were verified using analytical calculations. Based on the performed calculations, recommendations and criteria for using the exact or approximate stiffness matrix were developed.

Discussion. Finite elements that account for linear change of stiffness along the length make it possible to increase the accuracy of the results and reduce the degree of discretization of the computational scheme by more than two times. The approximate matrix shows good convergence with a smooth change in stiffness along the length. In such cases, discrete approximation is also acceptable. The exact matrix allows for calculating cases where the stiffness within the beam changes by orders of magnitude with low error. The classical discrete approximation in this case does not ensure high accuracy of the calculation results.

Conclusion. The paper presents stiffness matrices for finite elements that account for linear change of stiffness along the length. Their derivation is performed by two methods: on the basis of a variational formulation of the problem, and by direct integration of the differential equation of bending. The resulting matrices enable more accurate stress-strain analysis of beams with variable stiffness. They have an analytical format that simplifies their integration into existing software systems. Further research will be directed towards applying the obtained matrices to the calculation of reinforced concrete beams, considering physical nonlinearity, as well as to solving problems of stability and dynamics of beams with variable stiffness.

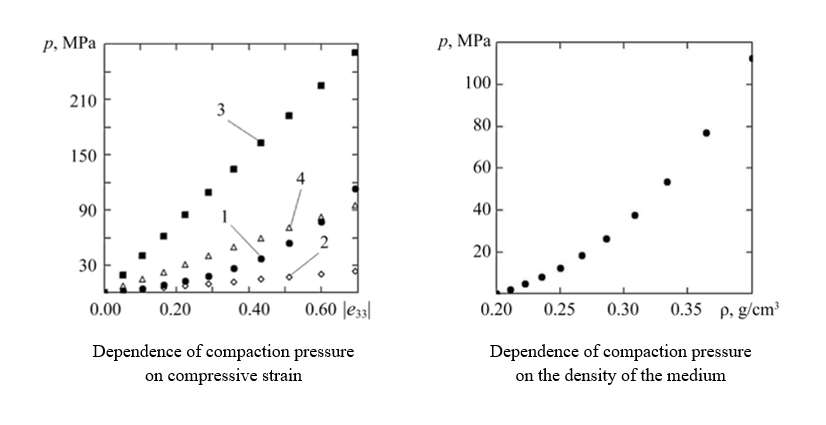

This paper develops a new approach to calculating the compaction pressure of a conglomerate. The model takes into account changes in density and material properties under elastic compression. The method utilizes stepwise loading and the solution to a series of elastic deformation problems. It is shown that the dependence of pressure on compressive strain is nonlinear. It is found that accounting for density variation reduces the error in pressure estimation. The results can be applied in the design of wood waste briquetting processes.

Introduction. Briquetting and pressing of wood and other powdered materials are becoming key processes in the circular economy and recycling of wood processing waste. Accurate calculation of compaction pressure is essential for equipment selection and optimization, making the task of modeling the deformation of conglomerates both practical and economically significant. The literature addresses the mechanics of powder media, porous materials, and the modeling of elastic-plastic deformations of granular conglomerates. However, most models assume fixed mechanical characteristics or approximations that do not account for the dependence of strength and elastic properties on changing density under compression. This leaves a gap in theoretical and applied approaches to adequately calculating pressure for materials with variable density. Therefore, the objective of this work is to develop an approach for calculating the compaction pressure of a particle conglomerate as a function of the degree of elastic compression, taking into account changes in the mechanical characteristics of the medium.

Materials and Methods. The mathematical description of the research problem relied on the theory of elasticity. Based on the principle of superposition, the process of medium deformation was divided into a number of stages, within which the particle conglomerate received a small increment in height, and the mechanical characteristics assumed a constant value. The proposed approach for determining the compaction pressure was based on the solution to a series of inverse elastic problems in which the displacement of the upper boundary of a conglomerate of rectangular particles was specified, and the normal stress that caused this increment was sought. To account for changes in the density of the medium during deformation, the method of sequential loads was used: within each load step, the density was taken to be constant and was determined from the magnitude of the total compressive deformation. The Hencky strain, which has the property of additivity, was used as a measure of deformation.
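The staged loading idea can be illustrated with a deliberately simplified one-dimensional sketch. It is not the authors' model: lateral effects and the particle geometry are ignored, the cross-section is assumed fixed so that density follows from mass conservation, and the power-law form `E = E0 * (rho / rho0) ** m` with the names `E0`, `rho0`, `m` is a hypothetical parameterization.

```python
import math

def compaction_pressure(h0, h_final, rho0, E0, m, n_steps=1000):
    """Stepwise elastic compaction of a column (1D sketch, assumed names).

    Within each step the modulus is held constant at the value that
    corresponds to the density at the start of the step.
    Returns (total Hencky strain, pressure, final density).
    """
    total_strain = math.log(h0 / h_final)   # Hencky (logarithmic) strain
    d_eps = total_strain / n_steps          # equal logarithmic increments
    eps = 0.0
    pressure = 0.0
    for _ in range(n_steps):
        rho = rho0 * math.exp(eps)          # mass conservation, fixed cross-section
        E = E0 * (rho / rho0) ** m          # power-law modulus (assumed form)
        pressure += E * d_eps               # stress increment of this stage
        eps += d_eps                        # Hencky strain increments add up
    return eps, pressure, rho0 * math.exp(eps)

# Illustrative values only: compress a 0.10 m column to 0.05 m.
eps_total, p_variable, rho_end = compaction_pressure(0.10, 0.05, 200.0, 1.0e6, 2.0)
_, p_constant, _ = compaction_pressure(0.10, 0.05, 200.0, 1.0e6, 0.0)
```

Setting `m = 0` freezes the modulus at its initial value, so comparing `p_variable` with `p_constant` shows, within this toy setting, how strongly a constant-property assumption can change the estimated pressure.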

Results. As part of the study, an iterative model was constructed for calculating the compaction pressure of a particle conglomerate when the mechanical characteristics change depending on the degree of elastic compression. Series of test calculations were conducted using a conglomerate of wood particles, whose Young's modulus is described by a power-law density function. At each stage of deformation, the elastic constants of the material were assumed to be constant, depending on the density of the medium. Using the equilibrium equation and the superposition principle, based on the results of solving elastic deformation problems, the compaction pressure was calculated at each loading stage, and the dependence of the compaction pressure on the magnitude of the compressive deformation and the degree of compaction was constructed.

Discussion. When the change in mechanical characteristics with the degree of compression is taken into account, the curve of compaction pressure versus compressive deformation is clearly nonlinear: as the pressure increases, both the degree of compaction of the medium and the compressive deformation increase. A comparative analysis for a conglomerate of wood particles, computed once with a constant density of the medium and once with the density changing during deformation, revealed a significant error in the estimated compaction pressure when the density is averaged or when constant density values corresponding to the initial (undeformed) or final state are used.

Conclusion. The constructed iterative model allows for calculating the compaction pressure of a particle conglomerate, taking into account changes in mechanical properties under elastic compression. The proposed approach accounts for the nonlinearity of the compaction pressure dependence on the degree of compaction of the medium and can be applied to briquetting processes for wood waste.

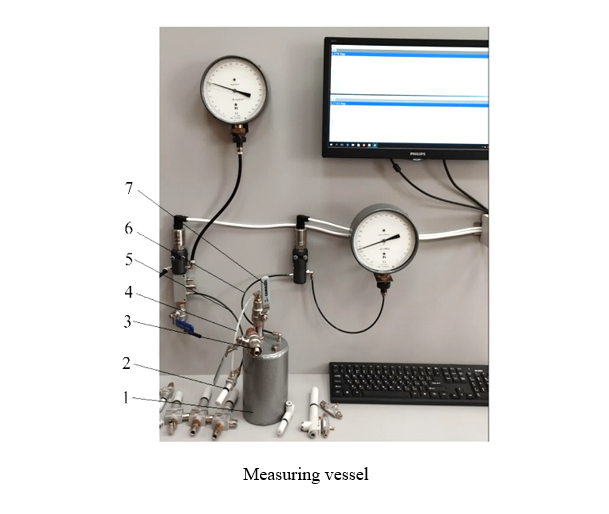

The study has obtained actual values for vessel evacuation time. The time it takes to create maximum vacuum depth for ejectors is measured for the first time. It is shown that actual values exceed catalog values by one-third. A method for selecting supply pressure to reach maximum vacuum is proposed. The results enable more accurate selection of ejectors for specific process applications. The data can be used in the design and modernization of vacuum systems.

Introduction. In industry, the process of obtaining technological vacuum using ejectors that utilize the kinetic energy of a jet of compressed air is widely used. The selection of the required ejector model, as well as their number (when creating a field of ejectors), is performed proceeding from the compliance of the ejector characteristics with the key parameters of the designed process technology. One of the most important characteristics of an ejector, significantly affecting the overall performance of the vacuum system, is the evacuation time of the graduated (calibrated) container. However, in technical literature, this parameter is not specified for the maximum vacuum depth produced by the ejector, nor for the corresponding supply pressure, but for certain, less-defined parameters, referred to as optimal by ejector manufacturers. In such cases, it is impossible to accurately estimate the actual value of an important criterion. Therefore, the objective of this work is to experimentally determine the actual value of the vacuum time of a graduated (calibrated) vessel for various types of ejectors.

Materials and Methods. Experimental studies were performed on a stand specifically designed and manufactured by the authors, which made it possible to study various parameters of vacuum ejectors. In particular, the stand made it possible to establish the exact evacuation time of a measuring vessel using ejectors with a nozzle diameter from 0.1 to 4.0 mm at the supply pressure that induced the maximum vacuum depth for each model under study. The research was carried out using the most popular vacuum ejectors of the VEB, VEBL, VED and VEDL families manufactured by Camozzi at a pre-determined, precisely set input supply pressure for each ejector size. The actual values of the evacuation time at the highest vacuum depth for each ejector were experimentally determined.

Results. It has been established that the performance of VEB, VEBL, VEDL, and VED series ejectors differs from that stated in the manufacturer's catalog. The time required to reach maximum vacuum for each ejector exceeds the manufacturer's specifications by 25–40%, which impacts the performance of the vacuum system.

Discussion. The experimental data have shown that the actual values of the vacuum time of the measuring vessel differ from the values given in the catalogs of manufacturers of ejectors. This difference is explained by the fact that when conducting appropriate tests, manufacturers are guided not by the maximum vacuum depth created by the ejector, but by the vacuum depth created by a certain “optimal” (the wording of the ejector manufacturer) value of the supply pressure. In almost all the cases considered by us, this “optimal” supply pressure produced a vacuum, whose depth differed from the maximum. In this regard, it seems advisable to adjust the value of the inlet supply pressure to attain the maximum vacuum depth for each type of ejector.

Conclusions. The measured vacuum creation times per liter of volume at the maximum vacuum depth produced by the ejector allow a more accurate selection of vacuum ejectors for the required process tasks and help ensure the greatest efficiency and cost-effectiveness of automated vacuum systems. The research results can be used by ejector manufacturers to adjust their basic catalogs and the corresponding recommendations for the use of these products. Further research will study the accuracy of the geometric shapes of the ejector channel surface, the surface finish, and the production technology, all of which affect the passage of air flow.

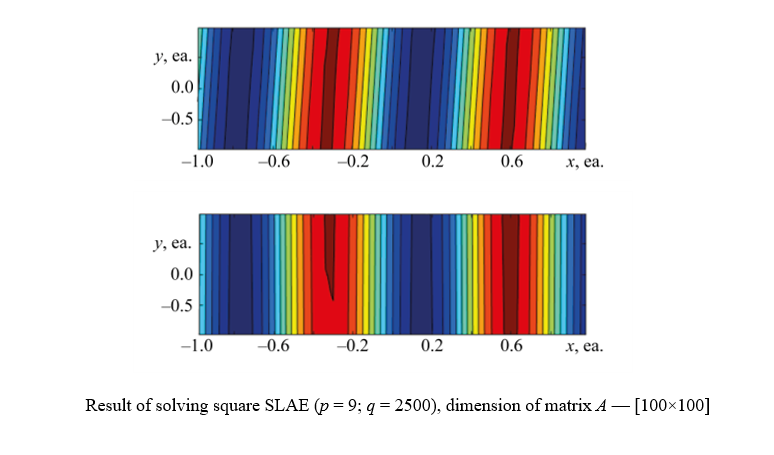

A method for early control of resonator density nonuniformity is developed. The method relates the optical properties of quartz glass to its density distribution. Density is reconstructed from measurements of the light beam passing through a workpiece. The density distribution with a deviation of no more than five percent is obtained. The method is sensitive to volume defects such as pores and bubbles. The results are applicable to optimizing the production of high-precision attitude control systems.

Introduction. The implementation of new-generation high-precision attitude control systems with improved technical characteristics remains a key task in precision instrumentation, since it is required for the reliable operation of moving objects with a long service life. One promising approach is the use of sensors based on the Bryan effect (hemispherical resonator gyroscopes, HRG), which show significant advantages in stability of characteristics under external factors. Over the past 10 years, foreign and domestic research has achieved noticeable success in improving the target parameters of HRG; however, certain problems remain open. Thus, the literature pays attention to reducing HRG measurement errors by compensating for the impact of resonator imperfections, but these methods are mostly applicable at stages after geometry generation. Methods for early identification of material inhomogeneities (density variation) during workpiece inspection are insufficiently developed, creating a gap in the process chain and reducing the efficiency of subsequent balancing and calibration. The objective of this study is to develop a method for identifying resonator density variations at an early stage of the process, namely during workpiece inspection.

Materials and Methods. The material considered is optically transparent fused quartz glass, the most common material for HRG resonators, in particular the KU-1 brand (foreign analogs: Corning HPFS 7980, JGS1). The identification method is based on the relationship between the optical properties of quartz glass (absorption coefficient) and the desired density distribution over the volume of the workpiece. A virtual experiment was conducted, which consisted in forming and solving a system of linear algebraic equations (SLAE) based on a series of measurements of the intensity of a light beam passing through the workpiece. A polynomial approximation was used to describe the density distribution in order to increase the robustness of the method. The SLAE solution was obtained as a pseudosolution by the least squares method based on the singular value decomposition.
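A toy version of such a virtual experiment can be sketched as follows. Everything specific here is an assumption for illustration: the workpiece is a unit square, the density is a first-degree polynomial `rho(x, y) = c0 + c1*x + c2*y`, the absorption coefficient is taken as proportional to density with a hypothetical constant `kappa`, and attenuation follows the Beer-Lambert law. The pseudosolution is computed explicitly from the singular value decomposition.

```python
import numpy as np

def ray_matrix(n_rays):
    """Rows map the coefficients (c0, c1, c2) of rho = c0 + c1*x + c2*y
    to the line integral of rho along straight rays across a unit square."""
    offsets = (np.arange(n_rays) + 0.5) / n_rays
    ones = np.ones(n_rays)
    # Horizontal ray at height y: integral over x in [0, 1] = c0 + c1/2 + c2*y
    horizontal = np.column_stack([ones, 0.5 * ones, offsets])
    # Vertical ray at abscissa x: integral over y in [0, 1] = c0 + c1*x + c2/2
    vertical = np.column_stack([ones, offsets, 0.5 * ones])
    return np.vstack([horizontal, vertical])

def reconstruct_density(I, I0, kappa, A):
    """Least-squares pseudosolution of A c = ln(I0 / I) / kappa via SVD."""
    b = np.log(I0 / I) / kappa
    U, s, Vt = np.linalg.svd(A, full_matrices=False)
    # Discard negligible singular values to keep the pseudoinverse stable.
    s_inv = np.where(s > 1e-12 * s.max(), 1.0 / s, 0.0)
    return Vt.T @ (s_inv * (U.T @ b))

# Virtual experiment with hypothetical numbers.
rng = np.random.default_rng(0)
c_true = np.array([2.2, 0.02, -0.01])      # assumed density coefficients
kappa, I0 = 0.05, 1.0                      # assumed absorption constant, source intensity
A = ray_matrix(20)
I_clean = I0 * np.exp(-kappa * (A @ c_true))
I_noisy = I_clean * (1.0 + 1e-5 * rng.standard_normal(I_clean.size))
c_est = reconstruct_density(I_noisy, I0, kappa, A)
```

With more rays than polynomial coefficients the system is overdetermined, and the SVD-based pseudosolution averages out measurement noise. Higher polynomial degrees or fewer rays change that balance, which is why the number of measurements has to be chosen together with the degree of the approximation.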

Results. A method for identifying the density variation of quartz glass at the stage of quality control of the technological workpiece of the HRG resonator was developed. The desired density distribution of quartz glass over the volume of the workpiece was obtained, coinciding with the “true” one — the difference was no more than 5%. The sensitivity of the method to the presence of macrodefects in the volume of the workpiece (pores, bubbles, etc.) was assessed.

Discussion. The results show that the proposed method can effectively control the density variation of the workpieces and optimize the resonator production, thereby improving the efficiency of the processes and minimizing the impact of imperfections on the resonator characteristics. Virtual experiments have demonstrated that measuring the intensity of the light beam passing through the workpiece allows for the reconstruction of the absorption coefficient and density distribution with an accuracy of at least 0.005%. The developed system of linear algebraic equations (SLAE) makes it possible to determine these parameters over the volume. The paper highlights some features related to solving underdetermined SLAE. Particular attention is paid to the need to control the ratio between the number of equations and unknowns to obtain a stable solution.

Conclusion. The proposed method for identifying the density variation of quartz glass at the stage of workpiece quality control in the production of HRG resonators demonstrates high efficiency and accuracy. The presented method describes the distribution function with high accuracy and is also flexible in terms of obtaining the optimal dimension of the SLAE, which is directly related to the number of experiments performed. The obtained results confirm the applicability of the material optical properties for controlling the density distribution over the volume, which allows for improved control of workpieces and optimization of production processes. The required measurement accuracy, determined by the level of density variation that affects the HRG characteristics, is practically achievable, which indicates that the method can be used in the manufacturing process. This approach can be applied in future research and development of high-precision systems, which will contribute to progress in the precision instrumentation industry and improve the quality of manufactured products.

This paper proposes a model for energy dissipation in a multilayer pavement. It is shown that strengthening the upper part of the roadbed reduces energy loss. The decisive impact of the subgrade rigidity on the magnitude of dissipation is established. Numerical modeling is consistent with field measurements through pulse loading. It is demonstrated that reinforced bases reduce energy dissipation by more than twofold. The results can be applied to the design of durable and cost-effective roads.

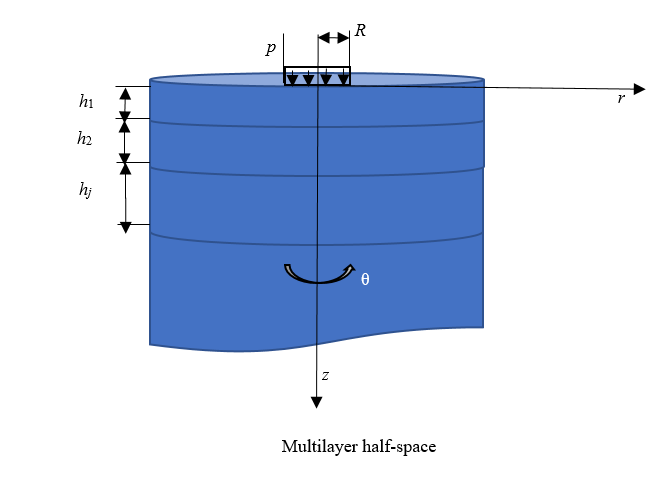

Introduction. The design of road pavements for highways is a key stage of project development, directly impacting their durability and operational costs. In recent years, in the context of increasing traffic intensity and dynamic loads, technologies for strengthening roadbeds and bases, such as geosynthetic reinforcement and stabilized layers, have become widespread, which makes the study of their efficiency an important task. The literature notes the practical advantages of reinforced layers: increased load-bearing capacity and reduced deformation. However, models for energy dissipation under dynamic impacts in structures with such layers are underdeveloped. Theoretical approaches to analyzing energy dissipation, including linear-elastic and viscoelastic models and finite element methods, have been primarily applied to traditional structures. Their adaptation to reinforced and stabilized layers requires further development, as there remain gaps in the quantitative comparison of efficiency by location and rigidity of reinforcements. The objective of the presented work is to analyze the dissipation of deformation energy in the structure of road pavements with different options for the arrangement of reinforced layers, and to determine optimal design solutions that contribute to increasing the durability of road pavements. To achieve this, it is required to formalize an energy dissipation model for structures with reinforcements and to conduct a comparative analysis of different locations and rigidity levels of the layers.

Materials and Methods. The research took a comprehensive approach to analyzing deformation processes in layered media, using road pavements as an example and involving both a calculation tool and modern experimental equipment. The calculation tool was a mathematical model of a layered half-space in an axisymmetric formulation in a cylindrical coordinate system. It was based on the solution to the system of dynamic Lamé equations and made it possible to construct amplitude-time characteristics of vertical displacements and of the impact loading impulse, from which dynamic hysteresis loops were constructed. The FWD PRIMAX 1500 shock loading unit served as experimental equipment; it recorded similar characteristics of the road pavement response under field conditions at a load equivalent to the calculated one.
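Once load and displacement histories are available, the energy dissipated per loading cycle can be estimated as the area enclosed by the load-displacement hysteresis loop. The sketch below shows that step only, under stated assumptions: the function name is hypothetical, and the harmonic signal with a phase lag is synthetic test data chosen because its loop area is known in closed form, whereas the study itself deals with impulse loading.

```python
import numpy as np

def dissipated_energy(load, displacement):
    """Area enclosed by the load-displacement loop, i.e. the integral of
    F du along the recorded path, evaluated with the trapezoid rule."""
    load = np.asarray(load, dtype=float)
    displacement = np.asarray(displacement, dtype=float)
    mean_load = 0.5 * (load[1:] + load[:-1])
    return float(np.sum(mean_load * np.diff(displacement)))

# Synthetic harmonic response over one full period (illustrative values).
t = np.linspace(0.0, 1.0, 2001)                      # time, s
F0, u0, phase = 50.0e3, 0.4e-3, 0.3                  # N, m, rad (assumed)
load = F0 * np.sin(2.0 * np.pi * t)
displacement = u0 * np.sin(2.0 * np.pi * t - phase)  # displacement lags the load
energy = dissipated_energy(load, displacement)       # J per cycle
```

For this synthetic signal the loop area equals `pi * F0 * u0 * sin(phase)`, so a stiffer, more elastic response (smaller lag) gives a narrower loop and less dissipated energy.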

Results. The study involved numerical modeling of road pavement structures traditionally used in the Russian Federation and so-called full-depth road pavements, which were composed almost entirely of materials reinforced with binders. Dynamic hysteresis loops were constructed, and a comparative analysis of the results was provided. A numerical experiment revealed that strengthening only the subgrade layer, even without installing a reinforced base layer beneath the asphalt concrete, reduced the amount of dissipated deformation energy. It was also concluded that the elastic modulus of the underlying half-space simulating the subgrade had the greatest impact on the amount of dissipated energy.

Discussion. The greatest effect, both technical and economic, can be reached by strengthening the top of the roadbed while preserving the loose layers in the base of the road structure. This solution will bring the functioning of the road surface closer to the elastic stage and at the same time reduce the risk of cracks appearing on the surface of the pavement due to an excessively rigid layer of reinforced base.

Conclusion. On the basis of the constructed dynamic hysteresis loops, it is shown that a reduction in the magnitude of deformation energy can be obtained both by installing reinforced layers of the road surface throughout its entire depth, and by locally strengthening the underlying half-space layer and an additional base layer made of sand. The numerical experiment demonstrated that the use of reinforced base layers reduced the amount of deformation energy dissipation in the pavement structure by more than 2–3 times. Qualitative agreement between the experimental results and the numerical simulation results was shown.

INFORMATION TECHNOLOGY, COMPUTER SCIENCE AND MANAGEMENT

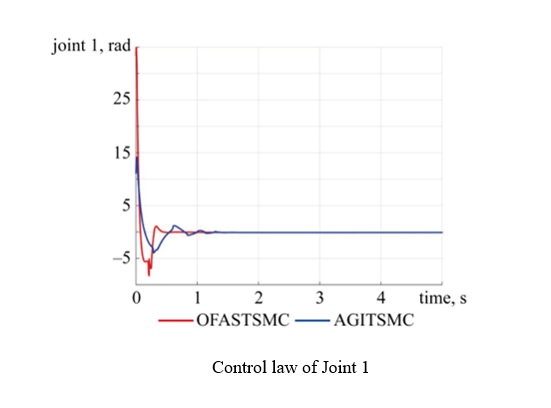

A new method for manipulator control with finite convergence time is proposed. The method estimates unknown disturbances and actuator failures in real time. Adaptive gain tuning reduces control overestimation. Smoothed super-twisting reduces chattering and maintains system robustness. Numerical tests demonstrate improved accuracy and smoothness of trajectory tracking. The method is suitable for industrial, medical, and service robotics.

Introduction. Robotic manipulators operate in dynamic environments under uncertainties, external disturbances, and actuator faults, posing a critical challenge to their control design. The development of robust and practically implementable control algorithms is becoming increasingly important with the growing use of manipulators in dangerous, precise and ultra-fast operations (industrial automation, medicine, space and service robots). Traditional control strategies, such as PID or computed torque control, offer simplicity but often lack robustness to unmodeled dynamics. Sliding Mode Control (SMC), particularly the Super-Twisting variant (STA), provides strong robustness, but suffers from chattering and typically requires prior knowledge of system bounds. Recent advancements like Adaptive Global Integral Terminal Sliding Mode Control (AGITSMC) improve finite-time convergence but may result in overestimated control gains and residual switching effects. This research addresses a critical gap in current methods: the lack of a unified control approach that ensures finite-time convergence, suppresses chattering, and compensates for both unknown disturbances and actuator faults using observer feedback. The objective of this study is to develop and analyze an Observer-Based Finite-Time Adaptive Reinforced Super-Twisting Sliding Mode Control (OFASTSMC) framework that unifies finite-time observer feedback, adaptive gain tuning, and reinforced sliding surfaces to achieve robust trajectory tracking of robotic manipulators under disturbances and actuator faults. The framework adaptively adjusts its gains, estimates disturbances online, minimizes chattering, and is intended to guarantee smooth, robust performance and practical implementability even in the presence of severe nonlinearities and faults.

Materials and Methods. This study considers the standard dynamic model of an n-DOF robotic manipulator derived using Lagrangian mechanics. The model accounts for nonlinear coupling effects, viscous friction, external disturbances, and additive actuator faults. To achieve robust finite-time control, a reinforced sliding surface is constructed using nonlinear error terms with adaptive power exponents, which accelerates error convergence. A finite-time extended state observer (ESO) is incorporated to estimate lumped disturbances and actuator fault torques in real time. Based on these estimates, the control law integrates a super-twisting sliding mode algorithm with adaptive gain tuning and boundary-layer smoothing to reduce chattering while ensuring strong robustness. The closed-loop system stability is formally analyzed within a Lyapunov framework, where rigorous proofs confirm finite-time convergence of the tracking error under the proposed controller. The proposed OFASTSMC algorithm is implemented in MATLAB/Simulink and validated on a 2-DOF planar robotic manipulator. The manipulator is subjected to time-varying disturbances and actuator degradation scenarios. For benchmarking, the method is directly compared with AGITSMC, using identical initial conditions, model parameters, and reference trajectories to ensure a fair and consistent performance evaluation.
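To make the super-twisting ingredient concrete, here is a minimal sketch of a boundary-layer-smoothed super-twisting law acting on a scalar sliding variable. It is not the OFASTSMC controller: there is no manipulator model, no extended state observer, and no adaptive gain tuning. The gains `k1`, `k2`, the boundary-layer width `phi`, and the disturbance `0.5*sin(t)` are hypothetical values chosen for illustration.

```python
import math

def sat(x):
    """Saturation used as a smoothed sign: linear on [-1, 1], clipped outside."""
    return max(-1.0, min(1.0, x))

def simulate_super_twisting(s0=1.0, k1=3.0, k2=4.0, phi=0.01,
                            dt=1e-3, t_end=10.0):
    """Explicit Euler simulation of s' = u + d(t) with the control law
        u  = -k1 * sqrt(|s|) * sat(s / phi) + v
        v' = -k2 * sat(s / phi)
    where d(t) = 0.5 * sin(t) is a bounded disturbance unknown to the law.
    Returns the history of the sliding variable s.
    """
    s, v, t = s0, 0.0, 0.0
    history = [s]
    for _ in range(int(round(t_end / dt))):
        sigma = sat(s / phi)                       # smoothed switching term
        u = -k1 * math.sqrt(abs(s)) * sigma + v    # continuous control signal
        d = 0.5 * math.sin(t)                      # hypothetical disturbance
        s += dt * (u + d)                          # sliding variable dynamics
        v += dt * (-k2 * sigma)                    # integral (twisting) term
        t += dt
        history.append(s)
    return history

history = simulate_super_twisting()
residual = max(abs(x) for x in history[-2000:])    # bound over the last 2 s
```

The integral term `v` gradually cancels the disturbance, so the control signal itself stays continuous. Replacing the sign function with `sat(s / phi)` trades exact convergence to zero for a small residual band around `s = 0`, which is the usual price of boundary-layer smoothing.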

Results. Simulation results demonstrate that the proposed OFASTSMC method significantly outperforms the benchmark AGITSMC in terms of tracking precision, robustness, and control smoothness. Specifically, the maximum joint position errors were reduced by over 40% compared to AGITSMC, and the settling time to reach the desired trajectory was shortened by approximately 25%. Additionally, the proposed method effectively mitigated chattering in the control signal due to the use of saturation functions and gain limits, resulting in smoother actuator commands. The adaptive observer accurately estimated the lumped disturbance and fault inputs in real time, providing effective fault compensation without prior knowledge. These improvements were validated across multiple scenarios including abrupt actuator failures, nonlinear load torques, and varying trajectory speeds. The sliding surface convergence was achieved in finite time, confirming the theoretical guarantees of the method.

Discussion. The results validate that OFASTSMC achieves robust, high-precision tracking for robotic manipulators operating under real-world uncertainties. Its novelty lies in the integration of adaptive exponent tuning, finite-time observer feedback, and gain-limited super-twisting control into a unified and practical framework. Unlike previous methods that rely on fixed gain structures or ignore observer feedback, OFASTSMC adapts in real-time and maintains finite-time convergence guarantees with minimal chattering.

Conclusion. The results obtained confirm that OFASTSMC is an efficient and robust solution to the trajectory tracking problem in the presence of uncertainties. The method is computationally efficient and easy to implement in digital control systems, making it suitable for practical deployment in industrial robots, service manipulators, or surgical arms. Future research will focus on extending this method to task-space control and real hardware implementation under sensor noise and model mismatches.

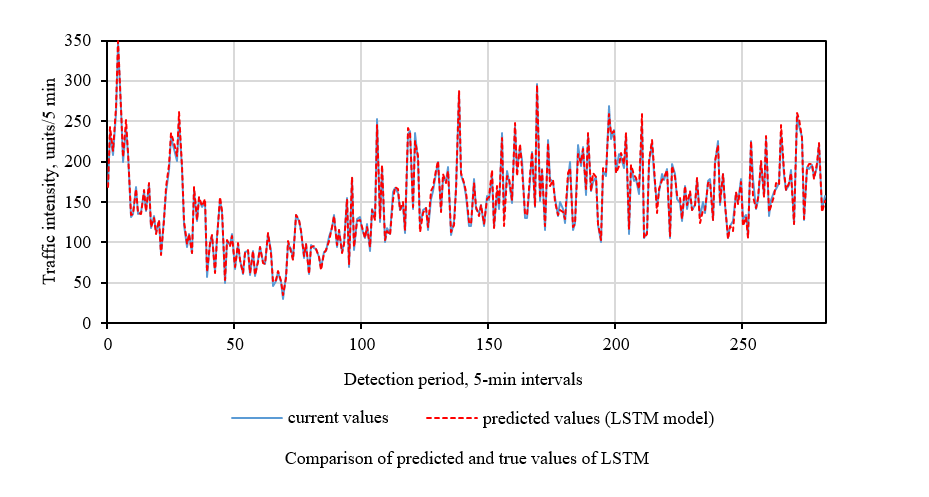

When choosing between a neural network and a classical machine learning model for short-term traffic flow forecasting on a highway, preference should be given to a neural network architecture, specifically LSTM. It is shown that a model with long short-term memory produces more accurate predictions. Its architecture better captures the temporal structure and complex dynamics of traffic. The support vector machine produces higher errors during sudden changes in flow. The results can be applied to reducing congestion and emissions on highways. The approach is useful for the development of intelligent transportation systems in cities.

Introduction. With highway congestion increasing, the efficiency of intelligent transportation systems depends on high-quality short-term traffic prediction. Statistical methods do not adequately account for nonlinear and dynamic traffic changes. Long short-term memory (LSTM) networks and support vector regression (SVR) offer more promising solutions. However, they have not been ranked in terms of accuracy, as there are no studies comprehensively comparing their adequacy for short-term traffic flow prediction. The proposed study fills this gap. The research objective is to compare the accuracy of LSTM and SVR and to select the optimal approach for traffic flow prediction on the Meiguan Expressway in Shenzhen.

Materials and Methods. Traffic detector data was collected on the Meiguan Expressway in June 2021. Data preprocessing methods were used, including weighted mean imputation and normalization. Autocorrelation analysis was used for feature extraction, along with the creation of an interaction variable between speed and detector occupancy. Models were trained and tested on data collected from detectors at 5-minute intervals.
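The feature construction described above (normalization, lagged flow values, and a speed-occupancy interaction variable) might look roughly as follows. This is a sketch under stated assumptions: the lag set, the use of the previous interval for the interaction term, and the function names are hypothetical, and the weighted mean imputation step is omitted because its weights are not given here.

```python
def min_max_normalize(values):
    """Scale a series to [0, 1]; a constant series maps to all zeros."""
    lo, hi = min(values), max(values)
    if hi == lo:
        return [0.0 for _ in values]
    return [(v - lo) / (hi - lo) for v in values]


def build_samples(flow, speed, occupancy, lags=(1, 2, 3)):
    """Build (features, target) pairs from 5-minute detector series.

    features = lagged flow values + speed * occupancy at the previous interval
    target   = flow at the current interval
    The lag set is an assumption; in the study it would follow from autocorrelation analysis.
    """
    max_lag = max(lags)
    samples = []
    for t in range(max_lag, len(flow)):
        features = [flow[t - k] for k in lags]
        features.append(speed[t - 1] * occupancy[t - 1])  # interaction variable
        samples.append((features, flow[t]))
    return samples


if __name__ == "__main__":
    # Hypothetical readings, for illustration only.
    flow = [10, 20, 30, 40, 50]
    speed = [1, 2, 3, 4, 5]
    occupancy = [2, 2, 2, 2, 2]
    for features, target in build_samples(flow, speed, occupancy, lags=(1, 2)):
        print(features, "->", target)
```

Either model (LSTM or SVR) could then be trained on the same samples, which keeps the comparison between them consistent.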

Results. Compared with SVR, LSTM performed 17.86% better in terms of root mean square error (RMSE), 19.82% better in terms of mean absolute error (MAE), and 25.78% better in terms of mean absolute percentage error (MAPE). In periods with the lowest flow rate prediction error, RMSE, MAE, and MAPE for the LSTM model were 36.5%, 34.3%, and 42.3% lower, respectively. In periods with the highest error, RMSE, MAE, and MAPE for the LSTM model were 73.2%, 65.4%, and 64.4% lower, respectively. The Wilcoxon signed-rank test (p < 0.05) confirmed the statistical significance of the differences.
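For reference, the three error metrics and a percentage improvement can be computed as in the sketch below. The abstract does not state how the percentages were derived; the `relative_improvement` helper assumes they are relative reductions with respect to the SVR error, and the sample numbers are hypothetical.

```python
import math


def rmse(actual, predicted):
    """Root mean square error."""
    return math.sqrt(sum((a - p) ** 2 for a, p in zip(actual, predicted)) / len(actual))


def mae(actual, predicted):
    """Mean absolute error."""
    return sum(abs(a - p) for a, p in zip(actual, predicted)) / len(actual)


def mape(actual, predicted):
    """Mean absolute percentage error, in percent; assumes no actual value is zero."""
    return 100.0 * sum(abs((a - p) / a) for a, p in zip(actual, predicted)) / len(actual)


def relative_improvement(baseline_error, model_error):
    """Percentage reduction of an error metric relative to a baseline (assumed definition)."""
    return 100.0 * (baseline_error - model_error) / baseline_error


if __name__ == "__main__":
    # Hypothetical flow values, for illustration only.
    actual = [100.0, 200.0, 400.0]
    predicted = [110.0, 190.0, 380.0]
    print(rmse(actual, predicted), mae(actual, predicted), mape(actual, predicted))
```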

Discussion. The superior predictive performance of LSTM stems from its architecture, namely, the combination of interaction variables and lag metrics. LSTM accounts better for the temporal dependences of the flow, adapts to complex, long-term dynamic changes, and remains accurate even with significant fluctuations. The lower predictive performance of SVR stems from its weak nonlinear approximation ability. Sudden flow changes significantly increase its error rates.

Conclusion. When choosing between a neural network and a classical machine learning model for short-term traffic flow prediction on an expressway, a neural network model such as LSTM should be preferred. These research results can be useful in predictive strategies for reducing congestion. Short-term prediction based on LSTM can serve as a basis for optimizing traffic management, reducing congestion and pollutant emissions, and for optimizing intelligent transportation systems. A promising direction is the development of hybrid architectures that integrate contextual data (weather, infrastructure, accidents) to improve real-time predictions.

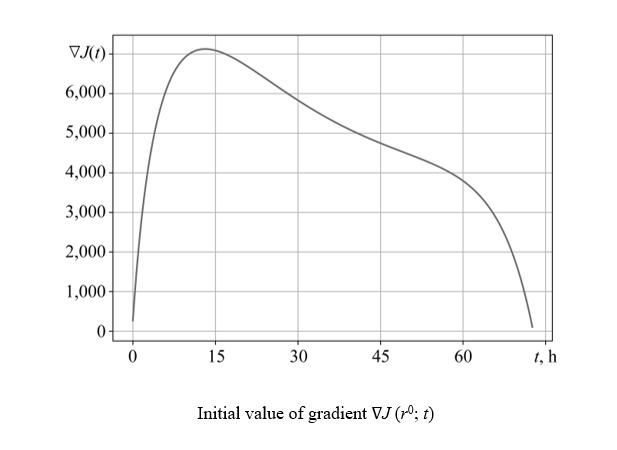

This paper proposes an algorithm for direct identification of the activity function. The algorithm is based on an extremal approach with a controlled descent direction. An analytical expression for the gradient is obtained through the solution to the adjoint problem. It is shown that the proposed method converges more than an order of magnitude faster than the gradient method. The limits of identifiability are related to the inertia and response time of the network. The method is applicable to modeling information flows in social networks.

Introduction. Improving the accuracy of mathematical models of information dissemination in social networks is directly related to the ability to correctly identify their parameters. In numerous papers, the fundamental complexity of this problem is effectively bypassed by replacing the direct identification of the desired functions with the selection of parameters for their heuristic approximations, which inevitably reduces both the accuracy and the universality of the model. In the linear diffusion model describing the spatiotemporal dynamics of information, one of the key parameters is the function characterizing user activity. The objective of this study is to develop and numerically implement an algorithm for direct parametric identification of user activity functions based on a direct extremal approach, which makes it possible to completely abandon heuristic approximations, and to evaluate its computational efficiency in comparison with the classical gradient method.

Materials and Methods. A direct extremal approach was used to solve the parametric identification problem. Unlike the classical steepest descent technique, the proposed method with adjustable descent direction adapts the search trajectory to local features of the objective functional by introducing a control parameter. The numerical solution to the direct and adjoint problems was implemented using an implicit finite-difference scheme. The method was verified using synthetic data.
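An implicit finite-difference scheme of the kind mentioned above can be sketched for a plain diffusion equation. The exact model equation, including the user activity term and the boundary conditions, is not given in this abstract, so the sketch assumes `u_t = D u_xx` on a uniform grid with zero Dirichlet boundaries, a backward-Euler time step, and a tridiagonal (Thomas) solver. All names and parameter values are illustrative.

```python
import math


def solve_tridiagonal(lower, diag, upper, rhs):
    """Thomas algorithm for a tridiagonal system (lower[0] and upper[-1] are unused)."""
    n = len(diag)
    c = [0.0] * n
    d = [0.0] * n
    c[0] = upper[0] / diag[0]
    d[0] = rhs[0] / diag[0]
    for i in range(1, n):
        denom = diag[i] - lower[i] * c[i - 1]
        c[i] = upper[i] / denom if i < n - 1 else 0.0
        d[i] = (rhs[i] - lower[i] * d[i - 1]) / denom
    x = [0.0] * n
    x[-1] = d[-1]
    for i in range(n - 2, -1, -1):
        x[i] = d[i] - c[i] * x[i + 1]
    return x


def implicit_diffusion_step(u, r):
    """One backward-Euler step of u_t = D u_xx with u = 0 at both ends.

    r = D * dt / h**2; the scheme is unconditionally stable in r.
    """
    n = len(u) - 2                      # number of interior nodes
    lower = [-r] * n
    diag = [1.0 + 2.0 * r] * n
    upper = [-r] * n
    interior = solve_tridiagonal(lower, diag, upper, u[1:-1])
    return [0.0] + interior + [0.0]


def run_diffusion(n_intervals=20, diffusivity=1.0, dt=1e-3, steps=100):
    """Evolve the initial profile sin(pi * x) on [0, 1] (assumed test problem)."""
    h = 1.0 / n_intervals
    r = diffusivity * dt / h ** 2
    u = [math.sin(math.pi * i * h) for i in range(n_intervals + 1)]
    for _ in range(steps):
        u = implicit_diffusion_step(u, r)
    return u


if __name__ == "__main__":
    profile = run_diffusion()
    print("midpoint value:", profile[10], "analytic:", math.exp(-math.pi ** 2 * 0.1))
```

The same solver can serve the adjoint problem, which for a linear diffusion model is typically integrated backward in time with an operator of the same tridiagonal structure.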

Results. For the identification algorithm, an analytical expression of the gradient of the target functional was obtained through the solution to the adjoint problem. The identifiability limits of the desired parameter conditioned by the inertia of the diffusion process and the network response time were determined. A comparative study of gradient algorithms was conducted. The classical steepest descent approach demonstrated slow and uneven convergence, requiring 13,217 iterations to reach the stopping criterion, whereas the method with adjustable descent direction provided convergence to the same level of accuracy in 376 iterations.

Discussion. The obtained results confirm the theoretical assumptions about the need to take into account the spatial heterogeneity of the functional gradient when solving infinite-dimensional optimization problems. The classical gradient technique exhibits low efficiency in reconstructing nonstationary parameters due to gradient nonuniformity, while the method with adjustable descent direction reaches uniform and rapid convergence. This demonstrates that adapting the algorithm to the specifics of an infinite-dimensional problem is a key success factor. The main contribution of the research is the development of a computing apparatus for the direct determination of functional parameters, which expands the methodological arsenal for analyzing systems described by partial differential equations.

Conclusion. The key findings of this research are the development and verification of an efficient algorithm for directly identifying user activity functions in a linear diffusion model of a social network. The practical significance consists in the creation of more accurate and interpretable tools for modeling information flows without resorting to a priori approximations. The developed algorithm has demonstrated significant advantages in convergence speed. However, the interpretation of the physical meaning of the identified function within this model requires further development. A promising direction is the application of the method to more sophisticated models that take into account the spatial heterogeneity of user activity, as well as its extension to the identification of a vector of functions.

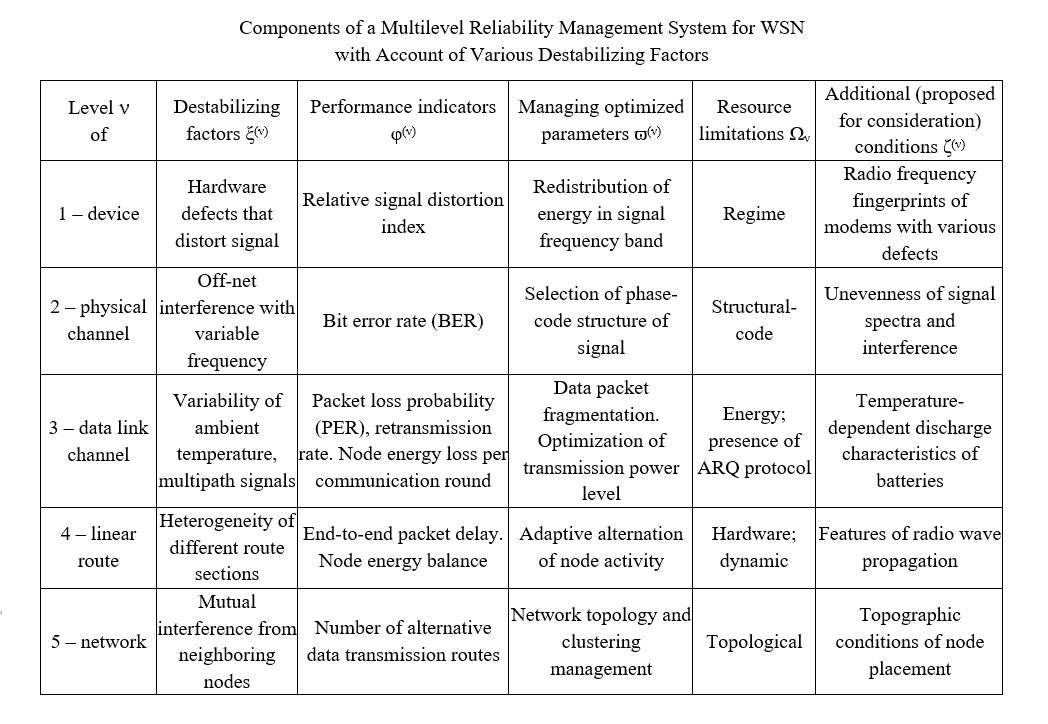

The author proposes a multilayered approach to improving the reliability of sensor networks. The model integrates network parameters, hardware properties, and environmental effects. Increased network resilience, reduced latency, and lower energy consumption are demonstrated. Relationships between performance indicators, destabilizing factors, and control factors are established. The approach is applicable to monitoring agricultural facilities and precision farming systems. The results can be used in the design of sustainable digital agricultural systems.

Introduction. In the context of digitalization of the agricultural sector, precision farming becomes a key driver of sustainability: wireless sensor networks (WSN) provide continuous monitoring of edaphoclimatic parameters and plant health, supporting yield forecasting and resource optimization while reducing operational risks. Despite significant progress in research on energy efficiency, routing, and topologies of WSN, the issue of their systemic reliability in real agricultural scenarios has been addressed only fragmentarily. Existing theoretical approaches rely on graph theory, Markov and quasideterministic models to assess connectivity and fault tolerance but do not sufficiently account for battery degradation, radio channel variability, and external factors (microclimate, interference), as well as their combined effects. The objective of this article is to develop a methodological approach to enhance the reliability of WSN for monitoring agricultural objects through a multilevel model that integrates network parameters, hardware properties, and external actions.

Materials and Methods. To develop the model, methods of system analysis were used, including analysis and synthesis of previously known models and algorithms for controlling the WSN for various levels of network interaction. At the first stage, analytical models of each level were examined: operating conditions of radio devices; physical channels with interference and hardware distortions; energy losses of nodes in channels with variable environmental characteristics; linear WSN with heterogeneous radio communication segments and clustering of WSN. At the second stage, an analysis of WSN control algorithms was conducted: selection of transmission modes with minimal signal distortion; optimization of signal structure with minimal Bit Error Rate (BER); control of data packet length and transmitter power; balancing of energy losses in relay nodes, as well as routing with minimal time and energy losses. At the third stage, the synthesis of the obtained results was performed, presenting a hierarchical monitoring infrastructure for the agricultural object that considered all levels of WSN interaction, parameters of sensor nodes, and the external actions.

Results. A methodological multilevel approach to increasing the reliability of WSN for monitoring agricultural facilities has been proposed and substantiated. This approach integrates network parameters, equipment properties, and external actions. It is validated by modeling the improvement of energy efficiency, reduction of delays, and increase in fault tolerance. Within this framework, a five-tier hierarchical concept of multilevel network infrastructure for monitoring agroindustrial objects based on WSN has been developed. It incorporates models and algorithms at the levels of: devices, physical channels, data transmission channels, linear routes, and networks. Single-level and inter-level dependences linking performance indicators, destabilizing factors, and controllable parameters have been established.

Discussion. The presented approach addresses the gap between energy models and the consideration of dynamic/information constraints of nodes, while also taking into account the actual operating condition of modems, and the thermal dependence of power sources. The multilevel integration of criteria (from signal shape correlation indicators to network probabilistic metrics of WSN integrity) allows for the alignment of local optimization and system goals, reducing the risk of conflicts between levels. The principle of level matching and external augmentation provides iterative adjustments of requirements and parameters, which increases the robustness of decision-making to environmental uncertainty and channel heterogeneity. Constraints of the current work include the need to calibrate models for specific hardware profiles, the dependence of efficiency on available PHY/MAC modes and ARQ protocols, and sensitivity to the accuracy of interference environment and temperature assessments.

Conclusion. The developed models and algorithms across five levels provide the specified metrics of interference resilience, delivery time, and energy consumption with the minimum required involvement of resources, which increases the survivability and service life of the WSN. The proposed approach creates the basis for the transition to systemically designed, reproducible solutions in precision agriculture. It reduces resource costs and environmental impact, and also increases the sustainability and profitability of agricultural production. Scaling requires field testing and the publication of reference configurations and code for reproducibility.